When you hear an AI voice laugh, pause, whisper, or choke up — it feels like magic.

But under the hood, it’s not magic. It’s math.

And some of the most fascinating math in modern AI.

Emotional AI voices are the bridge between synthetic and human storytelling.

They’re what make your script sound alive — not robotic.

So let’s unpack what’s actually going on inside that voice you just heard.

The era of “flat” voices is over

The first generation of AI voices sounded like this:

“Hello. I am your AI assistant. I can read your text. With. Pauses.”

Every word was equally weighted. No emotional contour, no rhythm.

That’s because early text-to-speech (TTS) systems were built to pronounce, not perform.

They mapped letters → phonemes → sounds.

Perfect pronunciation, zero soul.
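To make that pipeline concrete, here is a minimal Python sketch of the letters → phonemes → sounds idea. The tiny lexicon, the function names, and the fixed duration and pitch values are all assumptions made up for illustration, not any real engine's data. Notice that every sound gets the same length and pitch, which is exactly why it came out flat.

```python
# Toy illustration only: a real system uses a full pronunciation lexicon
# and real audio units, not this hypothetical two-word table.
TOY_LEXICON = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
}

def to_phonemes(text):
    """Look up each word and return one flat list of phonemes."""
    phonemes = []
    for word in text.lower().split():
        word = word.strip(".,!?")
        # Unknown words fall back to a placeholder symbol.
        phonemes.extend(TOY_LEXICON.get(word, ["<unk>"]))
    return phonemes

def to_sound_units(phonemes, duration_ms=80, pitch_hz=120.0):
    """Give every phoneme the same duration and pitch: no contour at all."""
    return [(p, duration_ms, pitch_hz) for p in phonemes]

units = to_sound_units(to_phonemes("Hello, world."))
```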

Then came neural TTS models, powered by deep learning.

Instead of assembling speech one sound at a time, they learned whole patterns of speech (tone, pacing, breathing) from large amounts of recorded human speech.

That’s where emotional AI began.

How emotional voices actually work

Modern emotional voice models — like ElevenLabs v3, Gemini-TTS, and Cartesia Sonic-3 — combine several layers of intelligence:

Text understanding

The model doesn’t just read text — it interprets it. Using a language model backbone, it analyzes context, punctuation, and sentiment.

“I can’t believe you did that” could sound angry, playful, or heartbroken depending on the surrounding context.
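As a rough picture of how the same sentence can land differently, the Python sketch below guesses a tone from the words around it. The cue words and the `guess_tone` function are hypothetical. Real models infer tone with a language model rather than a keyword list, so treat this as a cartoon of the idea, not the method.

```python
# Hypothetical cue words; a real model learns these signals from data.
CUE_WORDS = {
    "angry": {"furious", "slammed", "shouted"},
    "playful": {"laughed", "grinned", "teased"},
    "heartbroken": {"cried", "tears", "sobbed"},
}

def guess_tone(context):
    """Return the first tone whose cue words appear in the context."""
    words = {w.strip(".,!?:;\"'").lower() for w in context.split()}
    for tone, cues in CUE_WORDS.items():
        if words & cues:
            return tone
    return "neutral"

line = "I can't believe you did that"
tone_a = guess_tone("She laughed and said:")   # same line, playful delivery
tone_b = guess_tone("He slammed the door.")    # same line, angry delivery
```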

Prosody prediction

Prosody is the melody of speech: pitch, rhythm, and volume over time. Emotional voice models predict these values for each line instead of applying one fixed pattern.

They know when to raise the pitch for surprise, or slow down for tension.
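Here is a minimal sketch of that idea in Python, assuming prosody can be boiled down to three numbers. The `Prosody` fields, the emotion names, and the multipliers are invented for illustration. Real models learn far richer, time-varying contours from data.

```python
from dataclasses import dataclass

@dataclass
class Prosody:
    pitch_hz: float  # average pitch
    rate: float      # speaking-rate multiplier (1.0 = neutral)
    volume: float    # loudness multiplier (1.0 = neutral)

# Hypothetical (pitch, rate, volume) multipliers per emotion.
ADJUSTMENTS = {
    "neutral":  (1.00, 1.00, 1.00),
    "surprise": (1.25, 1.10, 1.10),  # raise the pitch for surprise
    "tension":  (0.95, 0.80, 0.90),  # slow down for tension
}

def predict_prosody(emotion, base=Prosody(120.0, 1.0, 1.0)):
    """Scale a neutral baseline by the multipliers for the given emotion."""
    pitch, rate, volume = ADJUSTMENTS.get(emotion, ADJUSTMENTS["neutral"])
    return Prosody(base.pitch_hz * pitch, base.rate * rate, base.volume * volume)

surprised = predict_prosody("surprise")
tense = predict_prosody("tension")
```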

Acoustic modeling

This stage turns the predicted prosody and emotion into audio. It shapes breathiness, vocal strain, resonance, and even the micro-pauses that make speech feel alive.

(Fun fact: a lot of “realistic” AI laughter can be thought of as a blend of phoneme noise plus a prosody spike.)

Style control tags

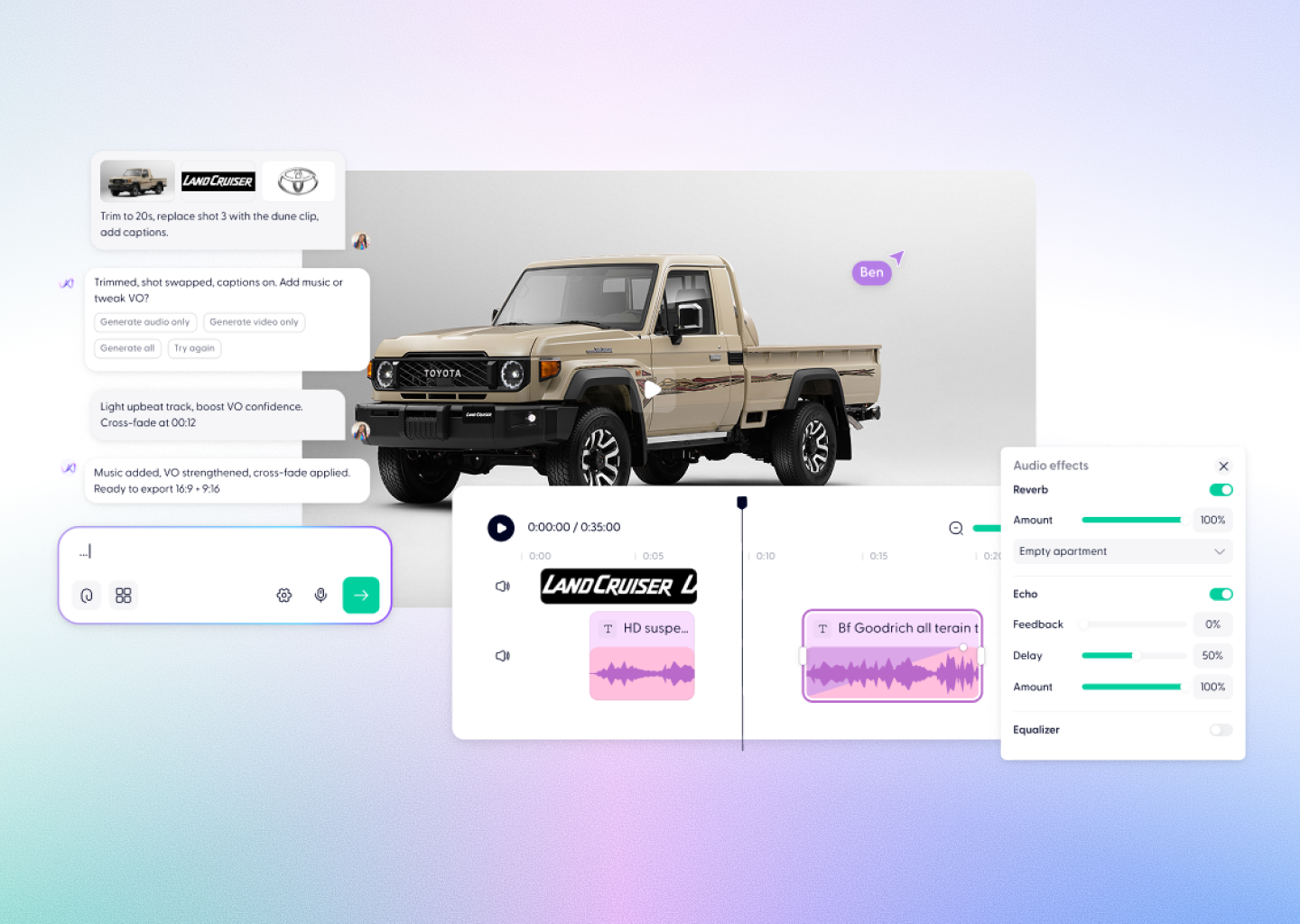

Some models, like ElevenLabs v3 or Wondercraft’s integrated TTS, accept inline tags like:

[excited] We did it! [sad] I thought we never would.

These tags change the emotion from one phrase to the next, giving creators film-level control without sound engineering.
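To show what a tagged script looks like once it is pulled apart, here is a small Python sketch that splits a line into (emotion, text) pairs. The `split_by_emotion` helper and the "neutral" default are assumptions for illustration. This is not how any particular vendor parses its tags, and each engine supports its own tag set.

```python
import re

# Matches inline tags such as [excited] or [sad].
TAG_PATTERN = re.compile(r"\[(\w+)\]")

def split_by_emotion(script, default="neutral"):
    """Return (emotion, text) pairs for a script with inline [tag] markers."""
    segments = []
    emotion = default
    position = 0
    for match in TAG_PATTERN.finditer(script):
        # Text before this tag belongs to the previous emotion.
        text = script[position:match.start()].strip()
        if text:
            segments.append((emotion, text))
        emotion = match.group(1).lower()
        position = match.end()
    tail = script[position:].strip()
    if tail:
        segments.append((emotion, tail))
    return segments

segments = split_by_emotion("[excited] We did it! [sad] I thought we never would.")
# segments == [("excited", "We did it!"), ("sad", "I thought we never would.")]
```

Each pair could then be handed to whichever voice engine you use, with the emotion passed in whatever form that engine expects.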

But emotion isn’t just in the voice

Here’s where things get interesting:

In Wondercraft, emotion travels beyond the voice.

When you speak through Wonda, your AI creative director, she doesn’t just pick a tone — she builds a multimodal emotional map.

That means:

- The voice modulates based on script cues.

- The face and gestures (in video) mirror those emotional beats.

- The pacing, camera angles, and music adapt too.

So when your AI actor whispers, the lighting might soften.

When they yell, the camera might shake slightly.
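One way to picture such a map is a shared table of cues that every channel reads from. The Python sketch below is purely illustrative. The cue names, values, and `build_emotional_map` function are hypothetical and do not describe Wondercraft's actual internals.

```python
# Hypothetical cue table; none of these names or values come from Wondercraft.
CUES = {
    "whisper": {"voice_volume": 0.4, "lighting": "soft", "camera": "steady"},
    "yell":    {"voice_volume": 1.0, "lighting": "hard", "camera": "slight_shake"},
}
NEUTRAL = {"voice_volume": 0.7, "lighting": "neutral", "camera": "steady"}

def build_emotional_map(beats):
    """Give every beat one shared set of cues for voice, lighting, and camera."""
    return [{"beat": beat, **CUES.get(beat, NEUTRAL)} for beat in beats]

emotional_map = build_emotional_map(["whisper", "yell", "talk"])
```

Because voice, lighting, and camera all read from the same entry, they cannot drift apart within a beat.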

That’s emotional coherence — and it’s what separates storytelling from synthesis.

Why it’s so hard to get right

You can’t fake emotion with filters.

The best emotional voices don’t sound “happier” because the pitch went up — they sound human because they understand why the pitch should go up.

That requires context modeling — something that’s still evolving fast.

For example:

- Cartesia Sonic-3 lets you control emotion through API tags.

- ElevenLabs v3 understands emotional phrasing through inline cues.

- Gemini-TTS builds emotion dynamically from paragraph-level meaning.

Wondercraft integrates all of these into one creative flow — so you don’t have to care which engine runs it. You just say what you want, and Wonda chooses the right emotional layer automatically.

The future of emotional AI

Next-gen models are experimenting with affective memory — voices that remember emotional tone across multiple lines of dialogue, so they don’t reset between sentences.
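The “don’t reset between sentences” idea can be sketched as a running emotional state that each new line only partly overwrites. The Python below uses simple exponential smoothing as a stand-in. That is an assumption chosen for clarity, not a description of how any of these models actually implement memory.

```python
def carry_emotion(line_scores, memory=0.6):
    """Blend each line's emotion score with the running state.

    line_scores: one valence score per line, from -1.0 (sad) to 1.0 (happy).
    memory: share of the previous state that is kept (0 = reset every line).
    """
    state = 0.0
    smoothed = []
    for score in line_scores:
        state = memory * state + (1.0 - memory) * score
        smoothed.append(round(state, 3))
    return smoothed

with_memory = carry_emotion([1.0, 0.0], memory=0.5)
no_memory = carry_emotion([1.0, -1.0], memory=0.0)
```

With `memory=0.0` each line stands alone, which is the reset behavior described above. Higher values let an earlier line's mood bleed into the next one.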

Others are exploring cross-modal empathy, where a face or background music cue can influence how the voice sounds.

In short, we’re heading toward AI performances, not just AI voices.

And that’s where Wondercraft shines — connecting those systems into one creative experience.

Try creating your own emotional performance. Open Wondercraft Studio, write a few lines, and ask Wonda to “make it emotional.”

You’ll see — or rather, hear — exactly how far emotional AI has come.