Every brand wants to sound human. But as content goes global, human voices don’t scale. Enter AI.

Voice cloning and text-to-speech have made it possible to produce high-quality, multilingual audio in minutes — not months.

Yet for all the progress, one problem remains: most AI brand voices still sound fake.

The solution isn’t just better models.

It’s smarter orchestration — how you combine cloning, translation, and emotion to create something that feels authentically you, in every language.

The rise of the “sonic brand”

Your brand voice isn’t just what you say — it’s how you sound.

According to a 2024 GWI study, 71% of consumers say they’re more likely to trust a brand whose voice “feels human and consistent” across platforms.

That’s why companies like Mastercard, Duolingo, and Headspace have all invested heavily in sonic branding — the strategic use of voice, tone, and sound identity to create recognition.

Traditionally, this meant hiring voice actors, recording thousands of lines, and managing endless localization workflows.

AI has changed that — but only if used correctly.

Step 1: Clone ethically, not endlessly

Voice cloning is the foundation of your brand sound — but it’s not about making a digital copy of your CEO.

It’s about building a signature voice that can evolve with your brand.

Take Duolingo again. They worked with ElevenLabs to scale their cast of voices across languages — using ethically sourced data from real actors, not random scraped audio. This let them maintain consistent tone and character worldwide.

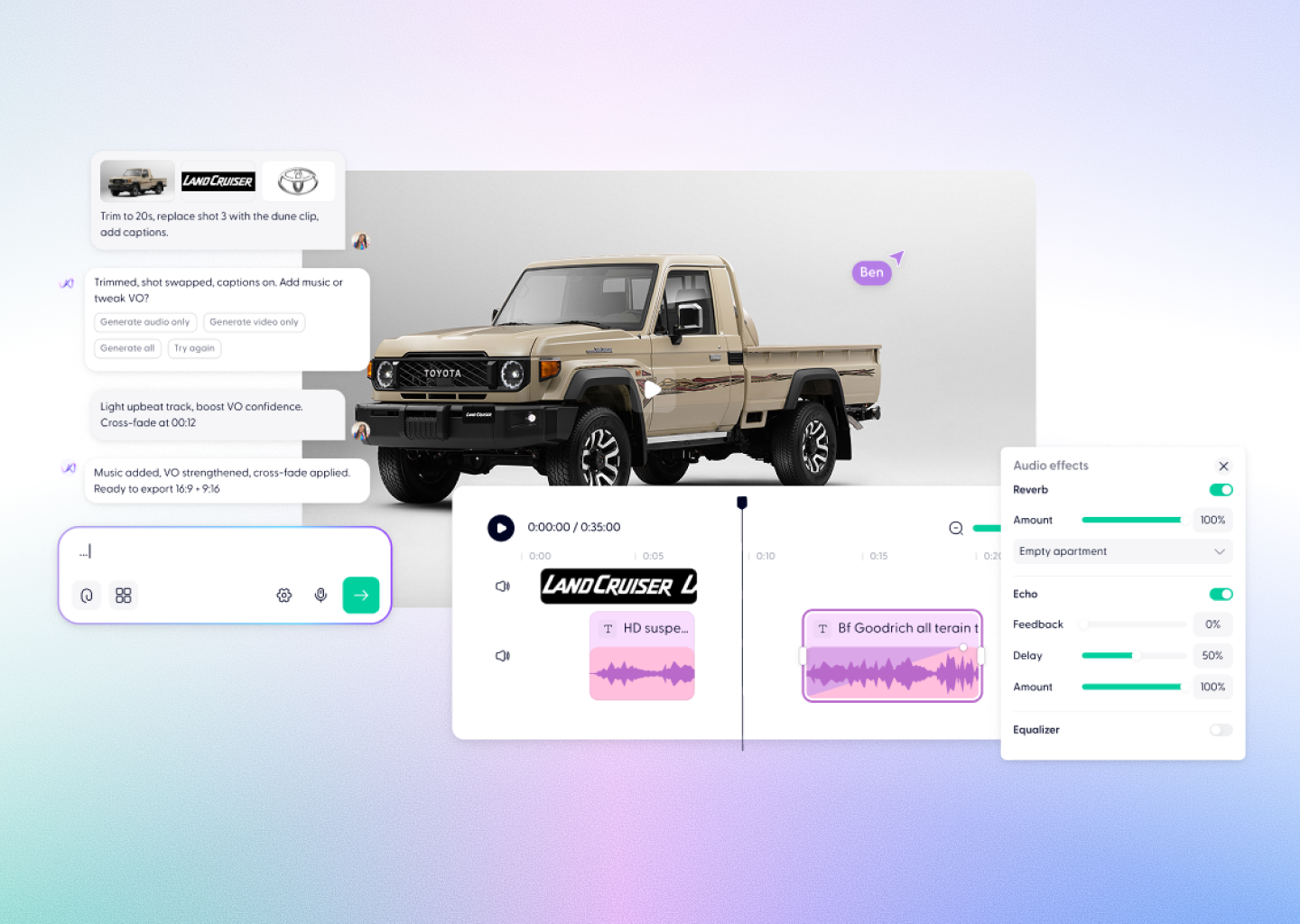

Inside Wondercraft Studio, cloning works the same way. You can securely upload samples, clone your own voice or your company's official voice, and let Wonda, your AI creative director, use that voice across projects such as ads, explainers, or even AI-generated video, all with commercially safe rights and clear transparency about the underlying model.

No sketchy datasets. No stolen likenesses. Just your brand, amplified.

Step 2: Translate the emotion, not just the words

Here’s where most AI systems fail. They translate the text perfectly — but lose the tone.

A study from MIT’s Computer Science and AI Lab (CSAIL) found that while modern LLMs like GPT-4 and Gemini excel at literal translation, they often miss emotional cues such as sarcasm, humor, or empathy — elements crucial to brand storytelling.

That’s where Wondercraft’s orchestration comes in. When you ask Wonda to localize a voice or video, it doesn’t just translate — it reinterprets emotional pacing and rhythm for the target language.

Your English script might say:

“We’re thrilled to introduce something new.”

But your Spanish version could sound like:

“Estamos muy emocionados de presentar algo nuevo.”

— with brighter intonation, longer vowels, and a slightly faster tempo to match cultural energy. That’s not just translation. That’s localization through emotion.
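What does "brighter and a bit faster" look like in practice? Many TTS engines accept SSML, the W3C markup language for speech, whose `<prosody>` element controls speaking rate and pitch. Here's a minimal Python sketch that wraps a script line in per-language prosody settings. The profile values and the `PROSODY_PROFILES` and `to_ssml` names are our own illustrative assumptions, not Wondercraft settings. Attribute support also varies by engine, and the sketch doesn't try to model vowel length.

```python
from xml.sax.saxutils import escape

# Illustrative per-locale prosody profiles (assumed values, not vendor defaults).
# rate is relative to the engine's normal speed; pitch is a change in semitones.
PROSODY_PROFILES = {
    "en-US": {"rate": "100%", "pitch": "+0st"},
    "es-ES": {"rate": "108%", "pitch": "+2st"},  # a touch faster and brighter
}


def to_ssml(text: str, locale: str) -> str:
    """Wrap one script line in an SSML <speak>/<prosody> document for the given locale."""
    profile = PROSODY_PROFILES[locale]
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        f'xml:lang="{locale}">'
        f'<prosody rate="{profile["rate"]}" pitch="{profile["pitch"]}">'
        f"{escape(text)}"  # escape &, <, > so the script text can't break the markup
        "</prosody></speak>"
    )
```

Calling `to_ssml("Estamos muy emocionados de presentar algo nuevo.", "es-ES")` returns a `<speak>` document you could hand to an SSML-capable engine. Keeping the profiles in one table means the emotional "dial" for each market lives in a single place instead of being scattered across scripts.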

Step 3: Combine cloning + TTS for scale

Even the biggest brands can’t rely on one cloned voice forever. The best strategies blend voice cloning for core identity and high-quality TTS for variation.

Examples:

- Coca-Cola’s “Create Real Magic” campaign used cloned voiceovers for key personalities, while using expressive TTS to generate hundreds of local ad variations.

- Spotify's AI DJ uses voices modeled on real presenters, layered with multilingual TTS for dynamic transitions.

In Wondercraft, this is seamless. You can use your cloned voice for intros and branding, while letting Wonda assign natural-sounding AI voices for dialogue, training content, or international rollouts — all in one timeline.

That means you can publish in 10 languages without managing 10 different projects.
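Under the hood, "one timeline, many languages" comes down to a simple voice-assignment rule. Here's a minimal Python sketch, assuming each segment already carries its translations: branded segments always use the cloned voice, and everything else gets a per-language TTS voice. The `Segment` class, the voice IDs, and the function names are hypothetical placeholders, not Wondercraft's API.

```python
from dataclasses import dataclass

# Hypothetical voice IDs; real IDs depend on the platform you use.
BRAND_VOICE = "brand-clone-v1"
TTS_VOICES = {"de": "tts-de-1", "es": "tts-es-1", "ja": "tts-ja-1"}


@dataclass
class Segment:
    role: str             # "brand" (intros, sign-offs) or "dialogue"
    text: dict[str, str]  # language code -> already-translated line


def assign_voices(segments: list[Segment], lang: str) -> list[tuple[str, str]]:
    """Pair each segment's translated line with the voice that should read it."""
    plan = []
    for seg in segments:
        # Branded moments keep the cloned voice in every language.
        voice = BRAND_VOICE if seg.role == "brand" else TTS_VOICES[lang]
        plan.append((voice, seg.text[lang]))
    return plan


def render_plan(segments: list[Segment], langs: list[str]) -> dict[str, list[tuple[str, str]]]:
    """One shared timeline, one voice plan per target language."""
    return {lang: assign_voices(segments, lang) for lang in langs}
```

Adding another language means adding one entry to `TTS_VOICES` and one translation per segment; the timeline itself stays the same. A missing voice or translation raises a `KeyError`, which is a reasonable way to catch an unconfigured language before anything gets rendered.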

Step 4: Keep your brand emotionally consistent

The challenge isn’t sounding the same in every market — it’s sounding authentic everywhere. That’s why emotional modeling matters.

Platforms like Cartesia Sonic-3 and Google Chirp 3 HD are pushing expressive speech far beyond robotic monotones — but they still require human direction.

That’s where Wonda steps in. It understands your brand tone — calm, confident, playful, or cinematic — and applies it across scripts, voices, and visuals.

You can literally tell it, "Make the German version sound more relaxed but keep the same energy," and it will adjust pitch, speed, and emphasis automatically.

It’s like having a creative director who speaks every language — and never forgets your brand book.

Step 5: Stay transparent

Voice ownership is becoming a serious issue.

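Regulators are catching up. The EU AI Act includes transparency rules requiring AI-generated audio to be disclosed and marked as synthetic, and the U.S. Copyright Office has urged Congress to protect people against unauthorized digital replicas of their voices and likenesses.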

Wondercraft was built with that in mind.

Every voice or video you create is traceable to its source model, ensuring commercial safety and ethical compliance — something most standalone tools don’t offer.
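What does "traceable" look like at its simplest? One common approach is to store a cryptographic hash of each exported file alongside the model and voice that produced it. The Python sketch below does exactly that. The field names (`model_id`, `consent_ref`, and so on) are hypothetical, and this is not the C2PA manifest format that production provenance systems often build on.

```python
import hashlib
from datetime import datetime, timezone


def provenance_record(audio: bytes, model_id: str, voice_id: str, consent_ref: str) -> dict:
    """Build a minimal record tying an audio file to the model and voice that made it.

    All field names are hypothetical; this is not the C2PA manifest format.
    """
    return {
        "sha256": hashlib.sha256(audio).hexdigest(),  # fingerprint of the exact exported bytes
        "model_id": model_id,        # which synthesis model produced the audio
        "voice_id": voice_id,        # which cloned or stock voice was used
        "consent_ref": consent_ref,  # e.g. ID of the signed voice-actor release
        "synthetic": True,           # explicit AI-generated disclosure flag
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


def verify(audio: bytes, record: dict) -> bool:
    """Return True only if the audio is byte-for-byte what the record describes."""
    return hashlib.sha256(audio).hexdigest() == record["sha256"]
```

If anyone edits the audio after export, `verify` returns `False`, because the hash no longer matches the stored fingerprint.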

Because in the new creative economy, trust is production value.

The new sound of global creativity

The future of brand storytelling isn’t about replacing human voices — it’s about giving them global reach.

With the right combination of cloning, emotion, and orchestration, your voice can travel anywhere — without losing what makes it yours.

And with Wondercraft, that process finally feels human again.

You don’t have to understand APIs or fine-tune models.

You just talk to Wonda, and it does the rest.

Start building your global brand voice today. Head to Wondercraft Studio and ask Wonda to “translate this into five languages — but make it sound like us.”

You’ll see how fast your voice can go global.