If you’ve ever tried to add an AI-generated voice to a video, you’ve probably run into the same problem everyone does. The voice sounds great — expressive, clear, natural — but when you lay it over the visuals, something feels… off.

The mouth movements don’t quite line up. The pacing feels rushed. The pauses don’t land where the emotion should. It’s technically synced, but emotionally wrong.

That gap — between alignment and believability — is one of the hardest things to get right in AI video creation.

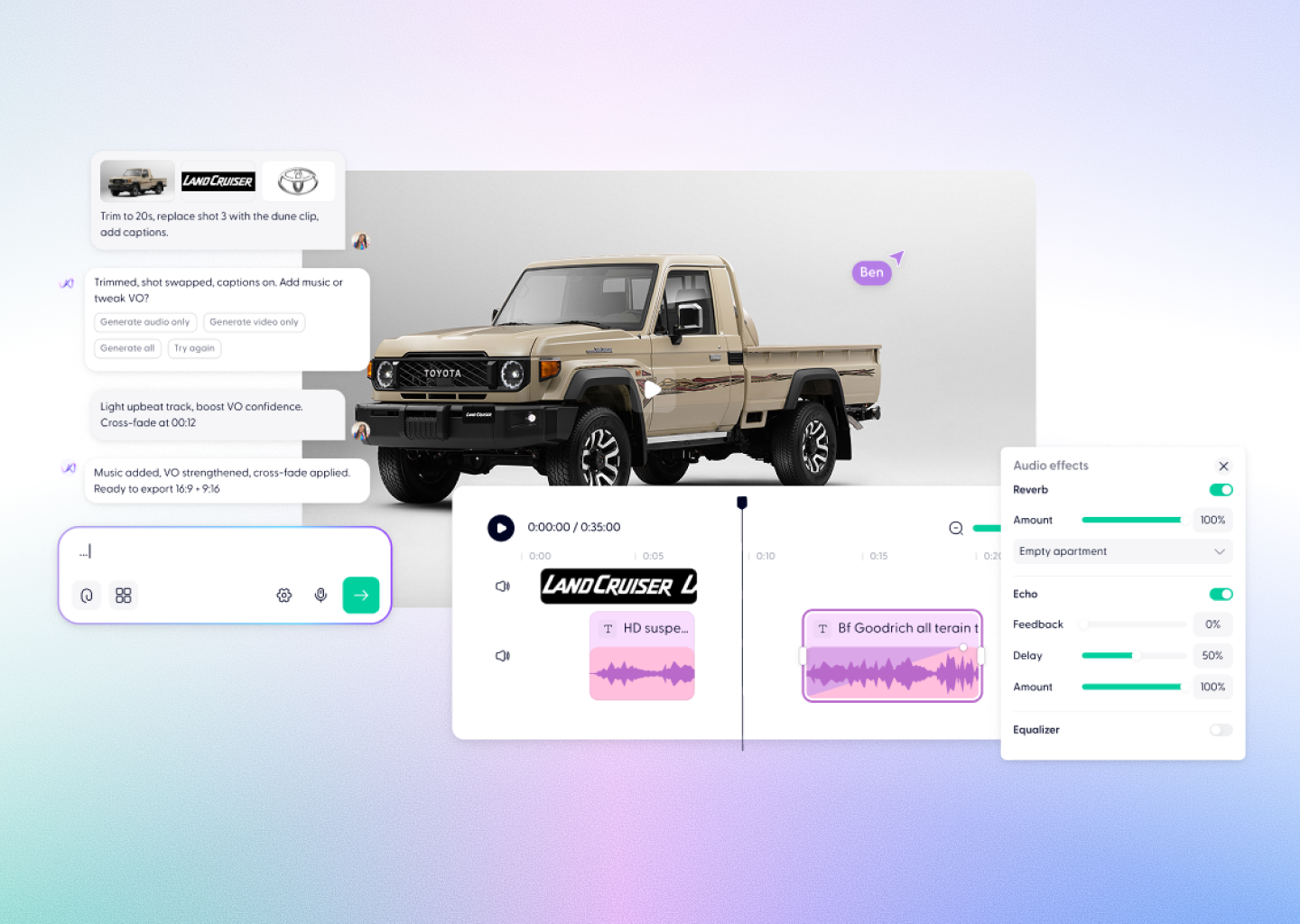

Let’s look at why that happens, how the best new models approach it, and how Wondercraft lets you get perfect voice-to-video sync in a few minutes — no editing timeline, no post-production, no guesswork.

Why Sync Is So Hard for AI (and Humans)

When humans talk, we don’t speak in precise beats. We speed up, pause, laugh, sigh. Our rhythm is emotional, not mathematical.

Most older “AI lip-sync” systems treated speech as a sequence of phonemes, each mapped to a mouth shape: if the audio had an “ah” sound, the mouth opened. Simple, but mechanical.
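To make the “mechanical” part concrete, here is a minimal Python sketch of that older phoneme-driven approach. The phoneme labels follow ARPAbet-style names. The mouth-shape (viseme) table and the `mouth_track` function are hypothetical simplifications and are not taken from any specific product.

```python
# Hypothetical, simplified phoneme-to-mouth-shape (viseme) table.
# Real systems use larger inventories; these labels are illustrative only.
PHONEME_TO_VISEME = {
    "AA": "open",
    "AE": "open",
    "AH": "open",
    "M": "closed",
    "B": "closed",
    "P": "closed",
    "F": "lip_teeth",
    "V": "lip_teeth",
    "OW": "rounded",
    "UW": "rounded",
}


def mouth_track(phonemes):
    """Map timed phonemes (label, start_s, end_s) to mouth shapes.

    Each phoneme is looked up independently. Emphasis, pauses and emotion
    are ignored, which is what makes the result mechanical.
    """
    track = []
    for label, start, end in phonemes:
        shape = PHONEME_TO_VISEME.get(label, "neutral")  # unknown -> neutral
        track.append((shape, start, end))
    return track


if __name__ == "__main__":
    # "mop" = M AA P: closed, open, closed, whatever the speaker's mood.
    print(mouth_track([("M", 0.0, 0.1), ("AA", 0.1, 0.3), ("P", 0.3, 0.4)]))
```

Because every phoneme is looked up on its own, a rushed line and a slow, emotional line produce the same sequence of shapes. Only the timing stretches, and that is the gap newer models try to close.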

Modern AI voices, from ElevenLabs, Play.ht, and others, generate speech more like a performance than a transcription. They improvise pacing, emotion, and tone. That makes them sound real, but it also makes them nearly impossible to sync perfectly with visuals that were timed without them.

Even professional editors know the frustration. You can drag waveforms frame by frame, but the results still look wrong if the energy doesn’t match the moment.

This is why new-generation models like Runway Gen-3, Kling, and VEED Fabric focus heavily on motion and expression. It’s also why Wondercraft doesn’t just “add” a voice to a video: it analyzes both together.

How Modern AI Fixes the Problem

The new standard is what researchers call multimodal alignment — instead of treating audio and video separately, AI learns to interpret them together.

It reads how a face moves, where a sentence stresses, when the camera cuts. Then it aligns not just words to frames, but emotion to motion.

That’s why, when you upload a video in Wondercraft, Wonda — the built-in AI creative director — doesn’t just attach your chosen voice. It analyzes the video’s rhythm and matches speech naturally: slowing down during long shots, pausing when the subject turns, even matching tone to visual energy.

It’s the kind of sync that feels invisible — because it feels human.
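One small piece of this idea is timing speech to the edit. As a rough illustration, here is a hypothetical Python heuristic that delays the narration at sentence boundaries so each new sentence starts just after a nearby shot cut. It is not Wondercraft’s implementation. The `Word` type, `align_pauses_to_cuts`, and the `max_shift`/`pad` values are assumptions chosen for the example. Word timings and cut times are assumed to come from elsewhere, for example a forced aligner and a shot detector.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds from the start of the clip
    end: float    # seconds from the start of the clip


def align_pauses_to_cuts(words, cuts, max_shift=0.6, pad=0.1):
    """Hypothetical heuristic: at each sentence end, push the rest of the
    narration later so the next sentence starts `pad` seconds after the
    first shot cut following the sentence, but only when that needs a
    positive delay of at most `max_shift` seconds.

    Only delays are applied; moving speech earlier could make sentences overlap.
    """
    cuts = sorted(cuts)
    aligned = []
    offset = 0.0  # total delay applied so far
    for i, w in enumerate(words):
        aligned.append(Word(w.text, w.start + offset, w.end + offset))
        is_last = i + 1 == len(words)
        if is_last or not w.text.endswith((".", "!", "?")):
            continue
        sentence_end = w.end + offset
        next_start = words[i + 1].start + offset
        # First cut at or after the point where this sentence finishes.
        later_cuts = [c for c in cuts if c >= sentence_end]
        if not later_cuts:
            continue
        shift = (later_cuts[0] + pad) - next_start
        if 0 < shift <= max_shift:
            offset += shift
    return aligned


if __name__ == "__main__":
    narration = [
        Word("Welcome.", 0.0, 0.5),
        Word("Look", 0.7, 0.9),
        Word("here.", 0.9, 1.2),
    ]
    for w in align_pauses_to_cuts(narration, cuts=[1.0]):
        print(f"{w.text:10s} {w.start:.2f}-{w.end:.2f}")
```

With a cut at 1.0 s, “Look” moves from 0.7 s to 1.1 s, so the second sentence lands on the new shot instead of just before it. A real system would weigh many more signals, such as facial motion, emphasis, and scene energy. This sketch only shows why sync is a timing problem across both tracks rather than a property of the audio alone.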

How to Do It Step by Step

Start in Wondercraft Studio.

It works whether you already have a video or you’re starting from scratch.

1. Upload or generate your video.

If you already have footage, drop it in.

If not, you can generate one directly in Wondercraft using models like Runway, PixVerse, or Kling, all integrated in one place.

2. Add your voice.

You can clone your own voice, choose from hundreds of pre-built AI voices, or have Wonda create one based on a description:

“I want a calm, confident female narrator with a slight British accent.”

The free plan even includes monthly credits for AI voice and video generation — enough to test and experiment without paying a cent.

3. Sync them automatically.

Once you’ve got your voice ready, just tell Wonda what to do:

“Sync this narration perfectly with the video timing.”

“Add natural pauses between sections.”

Behind the scenes, Wondercraft aligns every word to the motion in your clip. You’ll see it adjust pacing in real time — tightening when the visuals are fast, easing when they slow down.

4. Refine conversationally.

If you want more control, you don’t need to open an editing timeline. You can just chat:

“Add a half-second pause before the word ‘now’.”

“Make the tone slightly more dramatic during the close-up.”

Every change happens instantly — no rendering delays, no manual syncing.

5. Add sound and texture.

Voice sync isn’t only about the mouth; it’s about presence. You can ask Wonda to add ambient sound, light music, or reverb that matches the rhythm of the voice.

“Add soft city noise between sentences.”

“Bring in background music that fades out before the last line.”

It feels like directing — not editing.

Why Wondercraft Gets Sync Right

Most AI tools still make you jump between platforms: one for video, another for voice, another for mixing. Wondercraft removes that friction.

It connects directly with the best models — ElevenLabs for voices, Runway and Kling for visuals, Topaz for enhancement — and runs them through a unified creative flow. You don’t have to worry about file formats or timing issues; everything happens inside the same Studio.

And unlike typical free AI tools, Wondercraft gives every user free monthly credits to generate voice and video together — not just previews, but real usable outputs.

You can start with video, or start with a voice, or even start with nothing but an idea. Wonda figures out how to connect the pieces and deliver a perfectly synced, emotionally believable result.

Where This Is Going

AI sync is moving fast — not just in lip movement, but in expressive timing. Researchers at Stanford and Adobe recently found that audiences judge realism more by speech rhythm than by visual precision. A perfectly animated mouth with poor pacing feels fake; a slightly imperfect mouth with perfect timing feels real.

That’s exactly where Wondercraft is heading: toward a world where syncing isn’t a technical step, but part of storytelling. Where you describe the scene, the tone, the timing — and it just works.

You can test it right now. Upload a clip. Add a voice. Ask Wonda to sync them. You’ll see why timing, when it’s done right, disappears — and your video finally feels alive. Try it free at app.wondercraft.ai/studio — your free monthly credits are waiting.