You’ve probably seen it: an AI-generated person who looks flawless (perfect skin texture, synced lips, cinematic lighting), and yet something about them still feels off.

It’s not the realism. It’s the humanity.

That subtle discomfort, the sense that “something’s wrong even though I can’t explain why,” is the uncanny valley. It has haunted every major leap in synthetic media, from early Pixar films to deepfakes to the latest diffusion models. But that’s starting to change, fast.

Why AI faces still trigger the uncanny valley

Even the best AI video generators, such as OmniHuman-1.5, VEED Fabric 1.0, and Kling 2.5, can miss what the brain instinctively expects: micro-expressions, natural timing, and emotional synchronization.

It’s not enough to look human. You have to behave human.

Researchers at ByteDance recently showed that audiences rate AI-generated performances as “unnerving” when facial motion doesn’t line up with emotional prosody: the rhythm, stress, and melody of speech.

In plain terms: the model hears what’s said but not how it’s meant.

That’s why so many talking-head videos feel robotic. They get the words right but miss the intention behind them.

What’s changing: cognition inside the model

Next-generation models are no longer treating animation as “lip motion matching.”

They’re building a form of cognitive simulation: a tiny internal reasoning loop that decides why a character moves, not just when.

- OmniHuman-1.5 uses a dual-system architecture (slow, deliberate reasoning paired with fast, instinctive motion) to simulate the intent behind gestures.

- Gemini-TTS adds semantic awareness to audio generation, interpreting whether a line is sarcastic, anxious, or joyful before rendering tone.

- Fabric 1.0 from VEED applies “emotional tags” such as `[laughs]` or `[excited!]` that ripple through the facial animation pipeline, adding natural imperfections.
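To make inline emotional tags a little more concrete, here is a minimal Python sketch of how a tagged script could be split into segments, each carrying the cues that precede it. The bracket syntax, the `Segment` type, and `split_tagged_script` are hypothetical illustrations, not VEED’s actual format or API; a real pipeline would hand these cues to voice and facial animation models rather than just returning them.

```python
import re
from dataclasses import dataclass

# Hypothetical tag syntax: a cue is any text in square brackets, e.g. [laughs].
TAG_PATTERN = re.compile(r"\[([^\[\]]+)\]")


@dataclass
class Segment:
    tags: list[str]  # cues that apply to this stretch of speech
    text: str        # the words to be spoken


def split_tagged_script(script: str) -> list[Segment]:
    """Split a script into segments; each tag attaches to the text that follows it."""
    segments: list[Segment] = []
    pending: list[str] = []  # tags seen since the last emitted segment
    pos = 0
    for match in TAG_PATTERN.finditer(script):
        text = script[pos:match.start()].strip()
        if text:
            # Close the current segment with whatever cues preceded it.
            segments.append(Segment(tags=pending, text=text))
            pending = []
        pending.append(match.group(1))
        pos = match.end()
    tail = script[pos:].strip()
    if tail or pending:
        # Keep trailing text, or trailing cues with no text after them.
        segments.append(Segment(tags=pending, text=tail))
    return segments


if __name__ == "__main__":
    for seg in split_tagged_script("[excited!] We did it! [laughs] Honestly, I can't believe it."):
        print(seg.tags, seg.text)
```

On the example script, the sketch yields two segments: “We did it!” tagged `excited!`, and “Honestly, I can’t believe it.” tagged `laughs`. Attaching each tag to the text that follows it is one plausible design choice, since a cue like a laugh usually colors the delivery of the next line.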

Each of these systems points toward one thing: AI faces that think before they move.

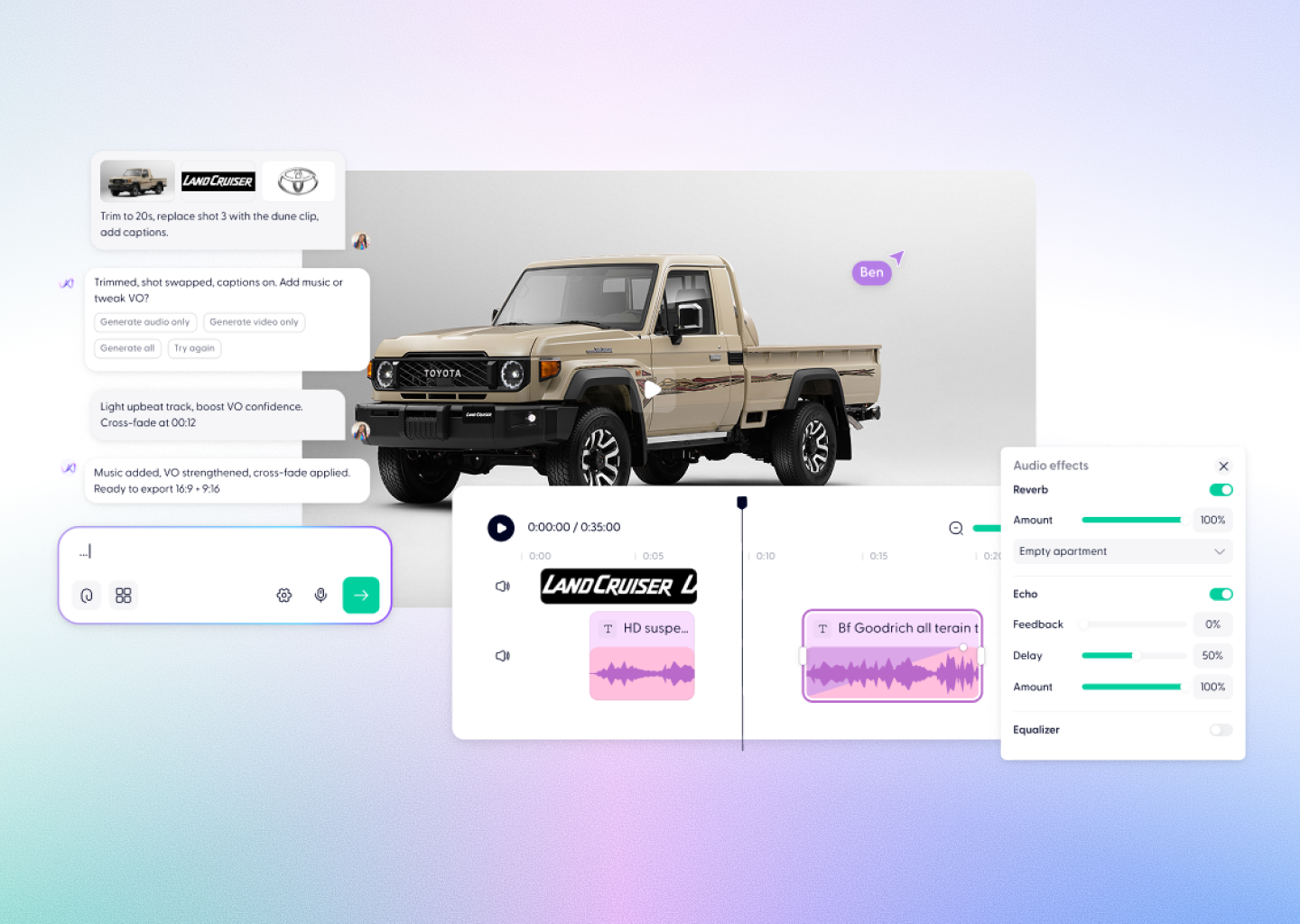

The Wondercraft layer: orchestration and direction

In Wondercraft Studio, we test these models constantly, because realism isn’t one model’s job anymore. It comes from the coordination between them.

That’s where Wonda, our built-in creative director, comes in. Wonda doesn’t just pick a voice or sync a lip. It orchestrates every layer (voice, facial animation, emotional pacing) so they behave as one performance.

When you say,

“Make her sound confident, but soften the last sentence,”

Wonda doesn’t just change the voice inflection. It also adjusts micro-expressions, posture, and timing.

Under the hood, Wonda might call ElevenLabs V3 for emotional voice rendering, Lipsync-2-Pro for precise mouth motion, and Fabric or OmniHuman for full-body animation, combining them seamlessly.

That orchestration is what finally closes the uncanny gap.

Why this matters for creators

If you’re a brand, educator, or content creator, this evolution means you can now produce human-like performances without an actor or a camera.

Imagine:

- A training presenter who maintains eye contact, reacts naturally, and speaks in your brand’s tone.

- A marketing avatar that laughs, pauses, and gestures just like a real spokesperson.

- A story narrator whose emotion actually builds with the scene.

That’s not sci-fi anymore. It’s already happening inside Wondercraft Studio.

The takeaway

AI faces still feel weird because they’ve been mimicking appearance, not intention.

They’re about to stop feeling weird because models are starting to understand performance.

That’s the frontier Wondercraft is built for.

One platform. Every model. Directed by Wonda.

If you want to see what “believable AI performance” actually feels like, open Wondercraft Studio and create your first AI video just by talking to Wonda.