Let’s try something. Open your camera roll.

Pick one image — any image that feels like it belongs to a larger story. Maybe it’s a portrait, a travel photo, a product shot, or something you’ve drawn.

Now ask yourself: what would this moment look like if it moved?

That single question is what every new generation of AI models is trying to answer — and what Wondercraft lets you experience instantly.

Because in 2026, you no longer need a camera to tell a visual story. You just need an image — and an idea.

Step 1. Look Closer

Take your chosen image and imagine it breathing. If it’s a portrait, notice where the light falls on the face. If it’s a landscape, find where the horizon meets the sky. If it’s a product photo, focus on the reflection that already hints at motion.

These are not random details. They’re the data points AI models use to predict depth, movement, and time.

When models like Runway Gen-3, Kling 2.5, and Pixverse V5 analyze your image, they don’t just “move” it. They approximate physics, inferring depth of field, light behavior, and natural motion from patterns learned across large volumes of video.

A single photo, to them, is a script. Every shadow, a stage direction. Every highlight, a cue.

Step 2. Add Intention

Now, think about tone. What do you want to feel when this image comes alive?

If you say, “make it cinematic,” the AI will use camera motion, contrast, and subtle focus pulls.

If you say, “make it surreal,” it might reinterpret colors, exaggerate motion, or stretch geometry in ways that feel dreamlike.

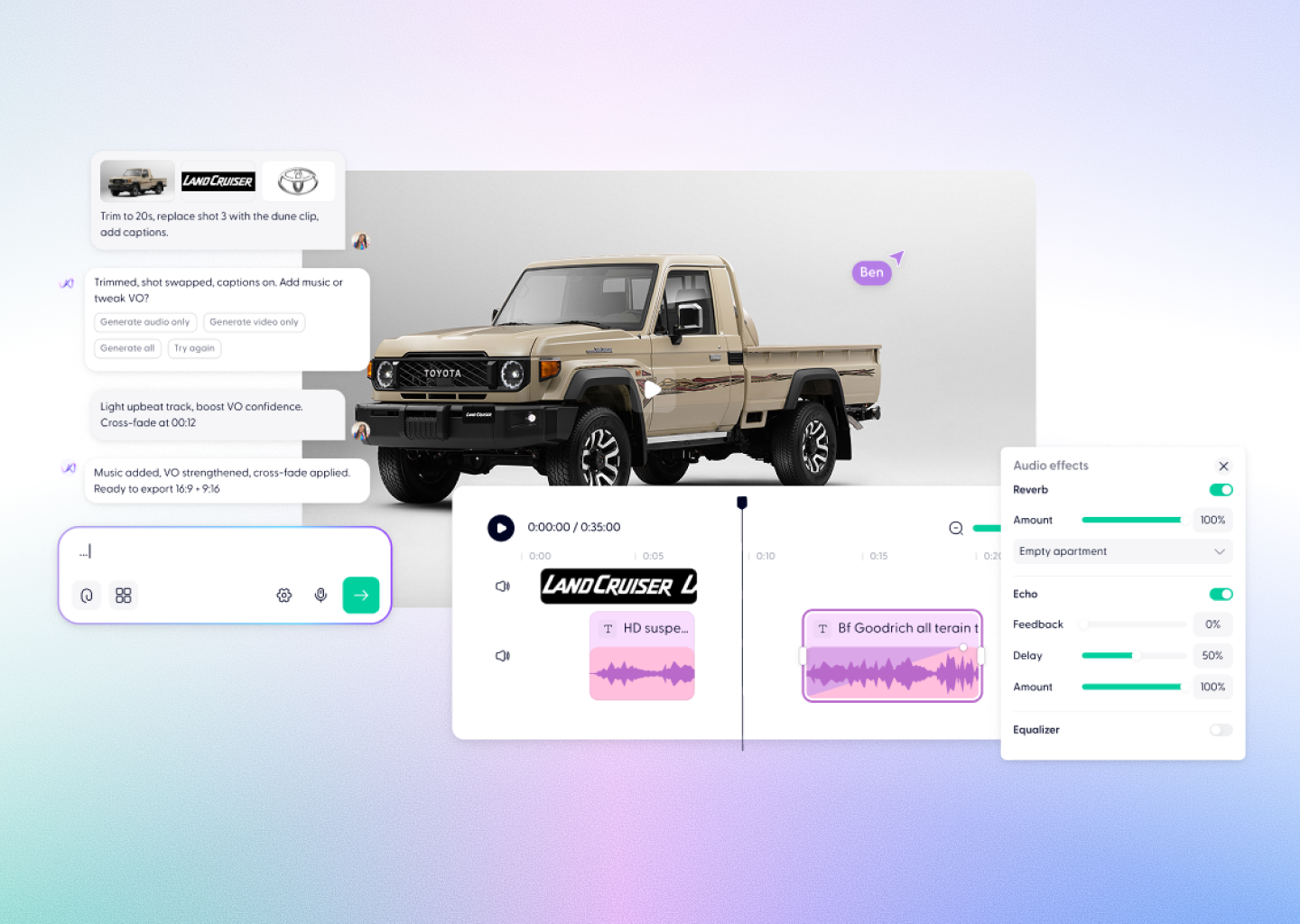

The model isn’t making art for you — it’s responding to your intention. That’s the creative partnership that Wondercraft enables through Wonda, its conversational creative agent.

Instead of juggling multiple apps, prompts, and interfaces, you simply tell Wonda what you’re trying to evoke. It then routes your image through the right combination of models: Runway for realism, Pixverse for style, Kling for physicality, Topaz for enhancement.

You describe the story. Wonda handles the pipeline.
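
To make the routing idea concrete, here is a toy sketch in Python. It is not Wondercraft's actual code or API: the function `route_request`, the keyword hints, and the lookup table are all hypothetical, and only the goal-to-model pairing (Runway for realism, Pixverse for style, Kling for physicality, Topaz for enhancement) comes from the description above.

```python
import re

# Hypothetical illustration only: not Wondercraft's real routing logic.
# The goal-to-model pairing mirrors the one named in the text.
MODEL_FOR_GOAL = {
    "realism": "Runway",
    "style": "Pixverse",
    "physicality": "Kling",
    "enhancement": "Topaz",
}

# Assumed keyword hints; a real agent would interpret language far more flexibly.
KEYWORDS = {
    "realism": {"cinematic", "realistic", "film"},
    "style": {"surreal", "dreamlike", "stylized"},
    "physicality": {"walk", "run", "smile", "splash"},
    "enhancement": {"sharpen", "upscale", "enhance"},
}


def route_request(prompt: str) -> list[str]:
    """Return the models a prompt would be routed to, in table order."""
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    return [
        MODEL_FOR_GOAL[goal]
        for goal, hints in KEYWORDS.items()
        if words & hints  # at least one hint word appears in the prompt
    ]


print(route_request("Make it cinematic, then upscale the result"))
# prints ['Runway', 'Topaz']
```

The point of the sketch is the shape of the idea: you state an intention once, and something else decides which tools it touches.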

Step 3. See It in Motion

Imagine you’ve uploaded a portrait of someone sitting in a car. You could tell Wonda:

“Add a warm sunset glow. Make the camera slowly track forward through the window.”

Behind the scenes, the Studio breaks that request into components — lighting adjustment, camera simulation, temporal diffusion — and generates a 5-second clip that looks like it came from a real film.
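
One way to picture that breakdown is as a small plan object. The sketch below is purely illustrative: `ClipPlan`, `plan_clip`, the trigger words, and the 24 fps frame rate are assumptions made for this example, not details of how the Studio works. Only the three component names and the 5-second length come from the text.

```python
from dataclasses import dataclass, field


@dataclass
class ClipPlan:
    """Hypothetical plan for one generated clip."""
    prompt: str
    duration_s: float = 5.0  # clip length mentioned in the text
    fps: int = 24            # assumed frame rate; not stated in the text
    steps: list[str] = field(default_factory=list)

    @property
    def frame_count(self) -> int:
        # Number of frames the clip would need at the assumed frame rate.
        return round(self.duration_s * self.fps)


def plan_clip(prompt: str) -> ClipPlan:
    """Map a prompt to the components named in the text (toy substring matching)."""
    plan = ClipPlan(prompt=prompt)
    text = prompt.lower()
    if "glow" in text or "light" in text:
        plan.steps.append("lighting adjustment")
    if "camera" in text or "track" in text:
        plan.steps.append("camera simulation")
    # Every clip needs frames generated over time, so this step is always added.
    plan.steps.append("temporal diffusion")
    return plan


plan = plan_clip(
    "Add a warm sunset glow. Make the camera slowly track forward through the window."
)
print(plan.steps, plan.frame_count)
# prints ['lighting adjustment', 'camera simulation', 'temporal diffusion'] 120
```

At the assumed 24 frames per second, a 5-second clip works out to 120 frames, each of which has to stay consistent with the original image.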

Now take it further:

“Extend the shot. Make her smile slightly. Add soft instrumental background.”

Wonda applies motion diffusion, regenerates the next sequence, and aligns the sound with the visual rhythm. What you see is not a static loop. It’s a story arc forming from a single frame. And you never once opened a timeline editor.

Step 4. Understand the Science

In a 2025 MIT Media Lab study, participants rated image-to-video generations 34% more engaging than static images and 19% more engaging than AI videos created from text prompts alone. Why? Because starting with an image grounds the model in physical truth.

Diffusion models prompted with text alone have to guess what a “scene” should look like. But when they start from a real image, they already have the structure of reality: the geometry, lighting, and composition are baked in.

That’s why continuing from an existing frame tends to produce more coherent results than generating from scratch.

In Wondercraft, you can reuse that same image or clip for multiple scenes. Wonda recognizes it automatically, preserving consistency so the same person, product, or background carries across videos.

It’s not just convenient; it’s how real stories maintain continuity.

Step 5. Build the Narrative

Now that your image is moving, what if you added a voice? A line of dialogue. A whisper. A sound cue.

This is where Wondercraft blurs boundaries — you can bring in audio from ElevenLabs or Wondercraft’s built-in voice tools, add subtitles, and use Wonda to time every cue to motion. You can even layer multiple voices or perspectives.
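
If you are curious what "timing a cue" looks like as data, subtitles are a good example. The sketch below writes cues in the widely used SubRip (.srt) layout. It assumes nothing about Wondercraft's internals: the helper names `srt_timestamp` and `to_srt` and the sample cues are made up for illustration.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    ms = round(seconds * 1000)
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{hours:02}:{minutes:02}:{secs:02},{ms:03}"


def to_srt(cues: list[tuple[float, float, str]]) -> str:
    """Turn (start, end, text) cues into SRT text, numbered from 1."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(
            f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n"
        )
    # Cue blocks are separated by a blank line.
    return "\n".join(blocks)


# Hypothetical cues for a 5-second clip.
print(to_srt([(0.5, 2.0, "It was the last evening of summer."),
              (2.5, 4.5, "She didn't look back.")]))
```

Each cue is just a start time, an end time, and a line of text, which is why it can be lined up against a moment of motion in the clip.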

Imagine turning a still product photo into a narrated mini-ad. Or an old family picture into a reflective short film.

You’re not using AI to generate content. You’re using AI to direct meaning.

Step 6. Think Bigger

Once you’ve seen your image move, it’s hard to unsee the potential.

Fashion brands are animating lookbook photography into moving campaign videos. Artists are transforming character sketches into cinematic reels. Educators are converting textbook diagrams into short explainers. And filmmakers are using Wondercraft to prototype storyboards that feel real before shooting a single frame.

In each case, the pattern remains constant: It starts with an image. It becomes a sequence. And through direction, it turns into a story.

The Creative Future

AI video generation isn’t replacing creativity — it’s changing where it begins.

Instead of starting with equipment or software, creators now start with imagination. And instead of spending hours cutting footage, they can describe it conversationally and see it materialize.

The future of creation won’t belong to those who master tools. It will belong to those who master intent.

That’s what Wondercraft is built for — a studio where intent becomes direction, and direction becomes video.

So the next time you take a photo, pause for a second and look closer. Because you’re not just capturing an image anymore. You might be capturing the opening scene of your next story.

Try it yourself — upload a single image at Wondercraft Studio and tell Wonda what happens next.